¶01. Introduction

This semester, I enrolled in Mindfulness, AI, and Ethics: Cultivating the Heart of the Algorithm. It’s a continuation of the classes I’ve been taking with Chris Berlin, Instructor in Ministry Studies and Pastoral Counseling, and his teaching assistant Tajay Bongsa, a former Buddhist monk. This is the fourth class I’ve taken with them (I had to withdraw early last semester due to some personal conflicts), and their classes have informed many of the changes I’ve seen within myself over the past two years. This is the first time the class has been taught, and given my long career in tech and my imperfect meditation practice, I signed up as soon as the course was available. The class has spent considerable time on both the mindfulness aspect (e.g., what mindfulness is and how to practice it, a brief history of Buddhism, the Noble Eightfold Path) and the exploration of how AI is impacting lives at both the societal and individual level.

Instead of writing papers this semester, we focused on a single project. We had to create something through three iterations, which were called sketches. The first sketch had to be designed entirely without the use of AI. The second was to be designed only by AI. The third and final iteration was to be a collaboration between the student and AI. For the second iteration, we had to use a minimum of two AI services, compare and contrast their results, and analyze those results across four tiers: Creativity, Accuracy, Comprehensiveness, and Role Support.

I use AI on an almost daily basis. It has become an oft-used tool in my day job of building websites and apps, and has increased my coding efficiency and enjoyment. However, using it in this manner is transactional and clear-cut. I’ve found a way of prompting the AI that gives me pretty decent results fairly quickly, without having to iterate through additional prompts. I keep the tasks atomic and give clear direction. For example, “Given these inputs, write a function that outputs this result. Use JavaScript, cite any examples pulled from, and talk through your decision-making process” usually works very well.
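As a rough illustration of that prompt pattern, here is a minimal sketch of how such an atomic prompt could be assembled. The `AtomicTask` shape and the `buildPrompt` helper are hypothetical names invented for this example; they are not part of any AI service or library, and the example task is only a stand-in.

```typescript
// Hypothetical helper: the names and fields below are illustrative only.
interface AtomicTask {
  inputs: string;   // what the function receives
  output: string;   // what the function should produce
  language: string; // the language to write it in, e.g. "JavaScript"
}

// Assemble one small, clearly scoped prompt from the task description.
function buildPrompt(task: AtomicTask): string {
  return [
    "Given these inputs: " + task.inputs + ", write a function that outputs " + task.output + ".",
    "Use " + task.language + ".",
    "Cite any examples pulled from.",
    "Talk through your decision-making process.",
  ].join(" ");
}

// Example task (an assumption, chosen only to show the shape of the prompt).
const examplePrompt = buildPrompt({
  inputs: "a duration in seconds",
  output: "a string formatted as mm:ss",
  language: "JavaScript",
});
console.log(examplePrompt);
```

Keeping each task this small is the point: one input, one output, one language, plus a request for sources and reasoning.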

¶02. The Problem Space

Since I have a decent amount of experience with AI in the coding domain, I wanted to challenge myself with something outside of computers and code. I also wanted the project to be an attempt at solving a real-world issue in my life. The problem space I chose was my meditation practice and the very real desire to make that time as free from electronics as possible.

Mindfulness seems to have caught on in the cultural zeitgeist over the past decade. With services and apps like Happier, Headspace, Waking Up, and Calm, it has never been easier to start meditating. I’ve used all of these apps over the past five years and, while they have been immensely helpful and supportive, my practice has started to shift away from app-based instruction and timers. After a few in-person courses and some virtual day-long retreats, I find that I am only using the silent meditation timer that comes bundled with one app or another. In addition to this shift, there are other issues with using the apps that are not beneficial to me:

- The push for gamification, such as streaks, metrics, cutesy animations, and social sharing. I meditate to work with my mind and the gamification of this practice feels orthogonal to the actual practice.

- Subscription costs, which have added up to hundreds of dollars a year.

- Tracking and data-mining of my usage. I am a privacy- and security-focused developer at my core and the ubiquity of companies pulling data from our electronic devices makes me cautious about allowing new tech into my life.

- One device does many things. My phone is so much more than my phone. I can bank on it, communicate in numerous ways, listen to the latest album or podcast, take pictures, record videos and audio, figure out my schedule, etc. This is largely beneficial, yet there is a high likelihood of being distracted even before I sit down on the cushion.

What would a return-to-simplicity approach look like? Are single-purpose devices any better? Are tangible, imperfect experiences preferable to polished, 2-D experiences? Can I reclaim agency from tech companies? Can I reduce my monthly bills? Am I missing the point of meditation completely by focusing on my aversion?

¶03. Sketches A & B

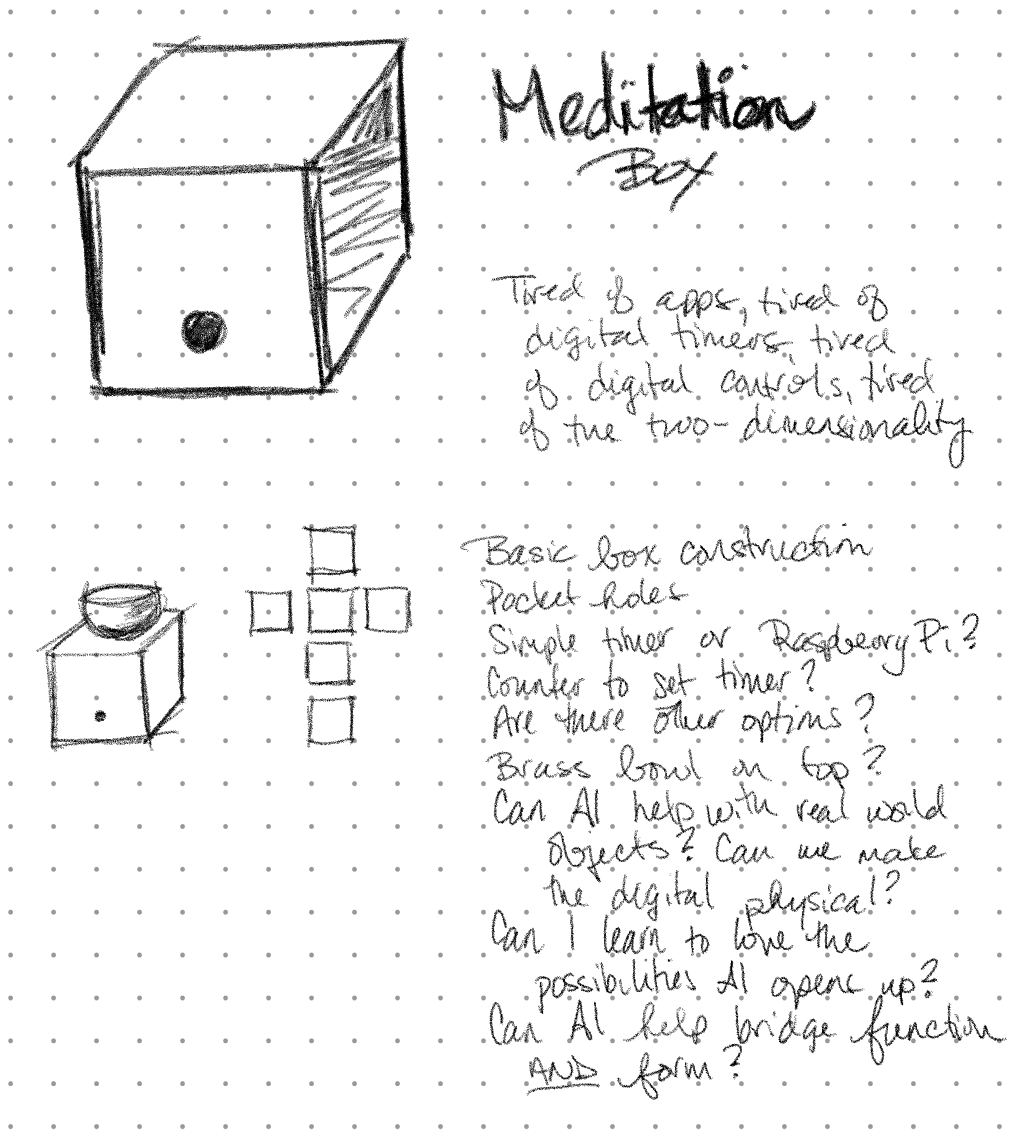

Sketches A and B were the precursors to the final sketch. Sketch A had to be built without the use of AI, and Sketch B had to use AI exclusively, with the human acting only as the initiator. My initial sketch was rudimentary:

At this point, I was still exploring using technology to build the inner workings of my meditation box. During the initial presentation, a fellow student suggested I build it entirely without tech, as the possibilities for improvement and enhancements may be overwhelming. With Sketch B, this was the problem space I worked in. Four AIs (Claude, ChatGPT, Venice, and Gemini) were used to create an analog meditation box.

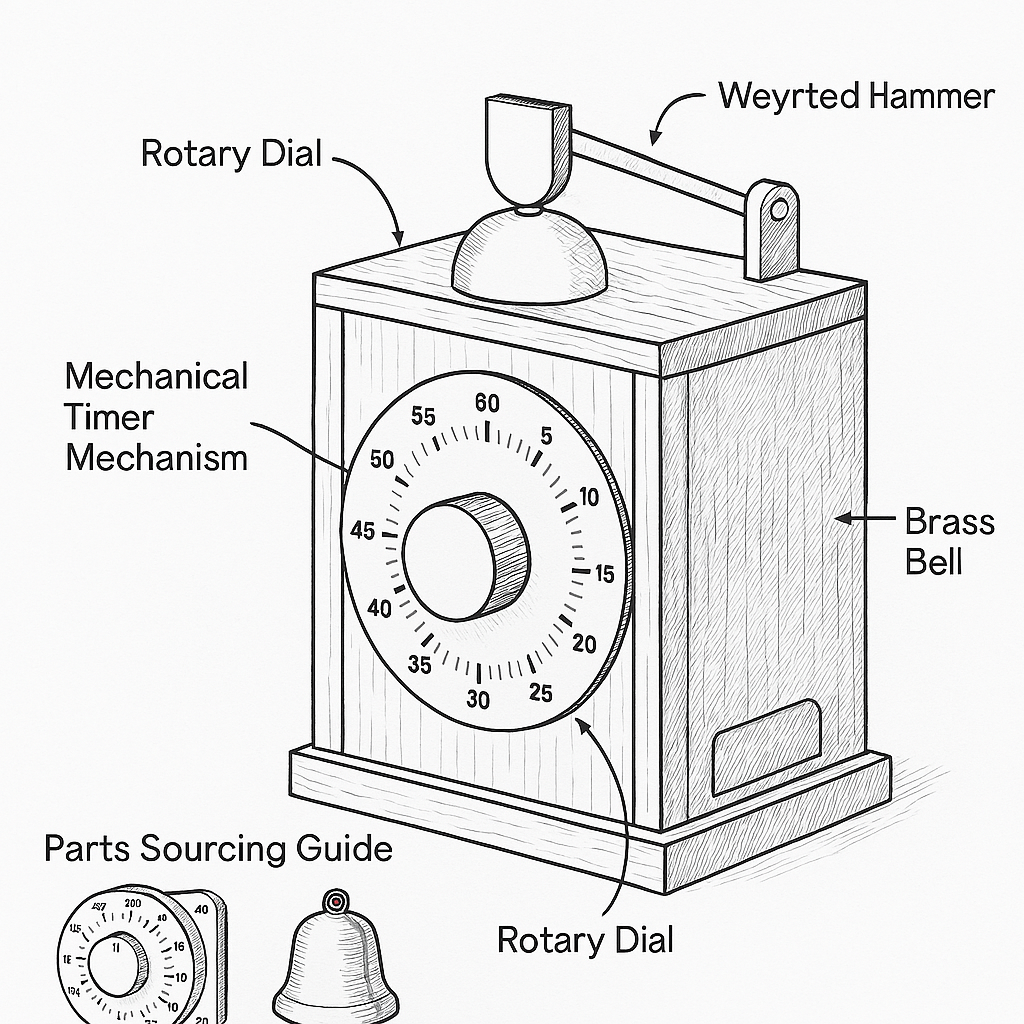

Regardless of platform and image-generating capabilities, no usable build plans could be created. This was frustrating for two reasons: mechanical concepts are difficult to understand without visual representation, and new-to-me concepts weren’t explained or defined at the outset. However, once I asked the platforms to provide citations and explain their reasoning, there were a few new concepts and terms that I was able to search for outside of the platforms to get a visual.

The refined prompt was entered into each platform an additional two times. Given that the prompt did not change, it would stand to reason that the output would be the same between iterations. However, this was not the case. Results were somewhat similar, but construction methods, materials used, and level of complexity varied too greatly for me to have confidence that any platform had presented the optimal solution to the prompt.

An additional issue was the lack of usable image generation. Of the four platforms, ChatGPT seemed to be the only one that came close to something usable. However, even in the image below, which came closest to being accurate, there isn’t enough detail to build from. The other platforms generated either rudimentary or nonsensical images.

The conclusion I reached after completing Sketch B was that using AI to solve problems or projects in the physical world was a frustrating experience. Due to my lack of knowledge in this domain space, I was unable to tell if the results were accurate or not, which required me to do additional research to vet what the platforms generated. Yet, the AI platforms were instrumental in discovering new-to-me concepts and methodologies.

¶04. Final Sketch

¶04.01 The Process

The results were not promising. Summing up my conclusions from Sketch B, I found using AI in real-world, physical spaces to be problematic. Over the next few weeks, I worked consistently on the project, using Claude as a sort of interlocutor to guide me in smaller, more atomic tasks. Eventually, I found YouTube videos and blog posts to be more helpful in explaining how analog time pieces work, as the visual display was key to understanding the underlying mechanics. As I explored the world of analog time keeping, I used cardboard to construct various timer pieces. Doing so showed that an analog time piece built out of wood, the material I wanted to use for the final construction, would be much larger than I wanted. This was due to my limited experience and limited set of woodworking tools. Smaller time pieces require finer precision tooling.

Knowing that I couldn’t build the meditation box as I originally desired, I went back to Claude to ask about alternative methods of constructing the time piece. The most promising solution was to purchase an analog kitchen timer, find a way to affix it to the interior of the box, and basically just zhuzh up the timer. A visit to Home Depot to buy a timer, and a fairly in-depth conversation with an employee there about possible ways to construct my box, resulted in a timer, a dowel, PVC pipe, and a renewed hope for my meditation box. After arriving home and unpacking the timer, I ran it for one minute. The metallic ticking of the clock escapement and the loud, garish ending bell made my hope fall and despair rise. Part of meditation and mindfulness is learning to be present with whatever arises in one’s session; I just don’t think listening to that clicking and the ending bell would ever amount to a pleasant (or even neutral) meditation session. This version of the meditation box would sit on my shelf unused.

¶04.02 Project Change

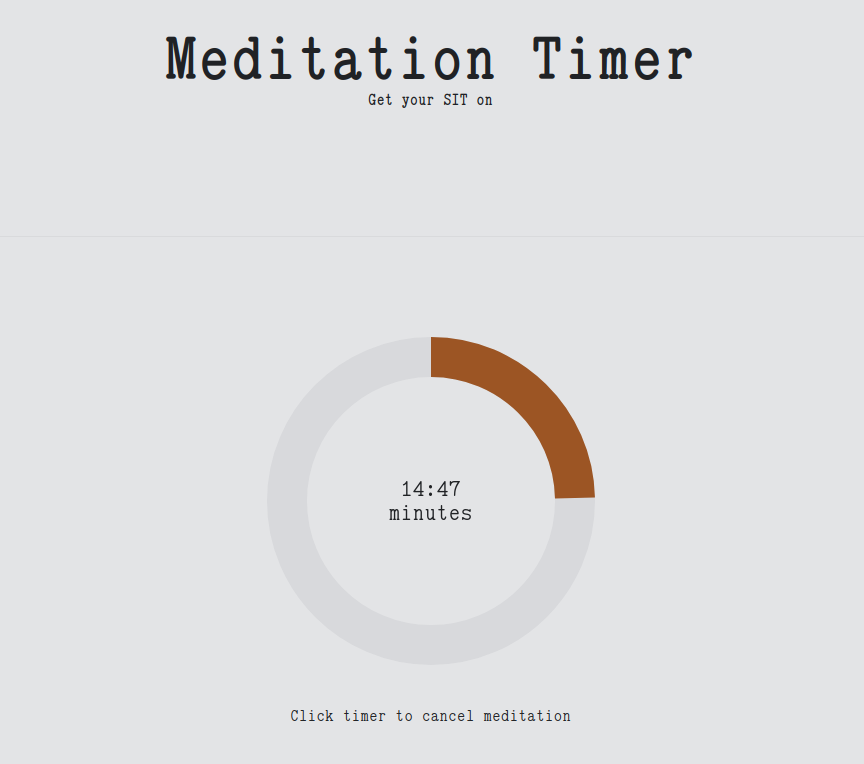

This was the point where I knew the original idea had to be scrapped. I came up against AI’s limitations, as well as my own, and didn’t have the skills or tools necessary to build a completely analog meditation box. So, as is my penchant, I adapted. What problems could I solve if I approached things differently? Many of the problems I wanted to solve with an analog device focused on the apps themselves (e.g., gamification, cost, tracking, and privacy), while only one issue dealt with the device. Could I use my software skills with Claude to create my own meditation timer?

And so I spent a weekend building a meditation timer here on this site. It was the first time I had asked AI to build an entire app from scratch, and iterating over the code was an interesting experience. This is where the delta between working in a problem space with little to no experience and one with robust experience is the greatest. Claude initially gave me a fairly decent visual UI for interacting with the timer, based on the previous discussions about the meditation box. However, it wasn’t at all in line with the design of this site or with my own personal aesthetic. Having the skills necessary to change what I disliked made the experience much more pleasant than the frustrating one with the analog meditation box.

My app solves many of the problems I hoped the analog meditation box would solve. There is no tracking on this timer, other than the privacy-focused analytics on this site that record when the page has been visited and from which country. There is no gamification and no habit streak monitoring. It doesn’t cost me anything other than what it takes to run and host my site, which I do regardless of the meditation timer. It didn’t solve the “no screen” requirement, but I wondered what would happen if I built a box to hold an old mobile phone. Of course, I had to build a box prototype. It isn’t exactly what I had in mind. Further prompting with Claude led me to Matthias Wandel’s videos, which show how he built a therapy timer using a Raspberry Pi Pico inside a small, wooden box. Having iterated through all the above and seeing what Wandel created, I think I should have built my own meditation box using tech for the innards.
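To make the "no tracking, no streaks" idea concrete, here is a minimal sketch of what the core of such a timer could look like. This is not the code running on this site; the function names `remainingSeconds` and `formatRemaining` are hypothetical, and the sketch assumes the page only needs a start time, a session length, and the current time. Nothing is stored and nothing is sent anywhere.

```typescript
// Hypothetical sketch of a tracking-free timer core (not this site's code).

// Seconds left in a session, computed from timestamps in milliseconds.
// Deriving the value from the clock, rather than counting ticks,
// avoids drift if the browser delays a tick.
function remainingSeconds(startMs: number, durationSeconds: number, nowMs: number): number {
  const elapsedSeconds = (nowMs - startMs) / 1000;
  return Math.max(0, Math.ceil(durationSeconds - elapsedSeconds));
}

// Format a number of seconds as mm:ss for display.
function formatRemaining(totalSeconds: number): string {
  const clamped = Math.max(0, Math.floor(totalSeconds));
  const minutes = Math.floor(clamped / 60);
  const seconds = clamped % 60;
  const pad = (n: number): string => (n < 10 ? "0" : "") + n;
  return pad(minutes) + ":" + pad(seconds);
}

// A ten-minute sit, checked 61 seconds after it started.
console.log(formatRemaining(remainingSeconds(0, 600, 61000))); // prints "08:59"
```

Because both functions are pure, there is no session history to keep: once the page is closed, the sit leaves no trace.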

¶05. Four-tiered Analysis

¶05.01 Creativity

Creativity is one of the most fascinating areas regarding AI. The surface area of happenstance and possibility is exponentially increased. AI goes beyond simple search engine functionality when in the research phase. It allowed me to follow my whim as I was exploring. At the same time, AI acts as an interlocutor, asking probing questions to further refine my thinking or opening up new avenues I did not know existed. In Sketch B, we were limited in our creativity with AI, simply due to the constraints. While the collaboration between me and AI didn’t produce the desired outcome, which one may call a failure, it didn’t feel like a failure. Instead, the dialogue between Claude and me contributed to understanding concepts (e.g., I had no clue what an escapement was and how it is used to keep track of time) and trying new ideas.

¶05.02 Accuracy

Accuracy is still an area that requires discernment. When using AI to write code, this is fairly easy for me because I am a software programmer. In other areas, I must spend more time vetting the output of AI. Both Claude and ChatGPT alert users to this fact at the bottom of every prompt box: “[Claude|ChatGPT] can make mistakes.” Perhaps a better warning would be AI WILL make mistakes, eh?

Comparing the analog meditation box and the meditation timer app, the gamut between accurate and false answers is on full display. The results of the analog project did not match my aspirations or supply the information and data my project needed to succeed. Yet, utilizing AI for the meditation timer app project most certainly did. To me, this indicates that accuracy has two prongs: the AI’s results and the user’s ability to separate truth from error.

¶05.03 Comprehensiveness

AI, in all iterations of both projects, most certainly provided a comprehensive overview. Not so much initially, but as I revisited the same topic repeatedly, the responses seemed to build upon themselves and the relevancy of the information increased. Claude and ChatGPT excelled at this. Venice and Gemini less so, though I suspect that with Gemini’s new model and its ability to link to other Google services, this is no longer the case. Claude will often ask follow-up questions or suggest a similar thread to explore after each response, thereby leading to further comprehension.

¶05.04 Role Support

I’ll never have the relationship that Joaquin Phoenix had with the Scarlett Johansson-voiced AI in HER, but it does support me when I use it. One of the reasons Claude has become my AI of choice rests in the default response tone and the capacity to change that tone. Claude names this Styles, which “allow[s] you to customize how Claude communicates, helping you achieve more while working in a way that feels natural to you” (“Configuring and Using Styles”). This isn’t all that helpful when tackling programming tasks, but when I use Claude as an interlocutor for my own interiority, I use two separate Styles:

- Compassionate Companion:

- Communicate with deep empathy, warmth, and unwavering support through kind and uplifting language.

- Zen Bouncer (based on Dalton and Wade Garrett from 1989’s Road House):

- Deliver razor-sharp, analytical insights through direct, uncompromising communication.

Sometimes I can’t take in what someone is trying to tell me due to the manner in which they tell me, and I have found this to be true with AI. The ability to change the tenor of AI changes my interaction with it. New ideas and insights have been born by contrasting the responses of each Style to the same question, as well. Sometimes, though, it doesn’t matter how a piece of information is delivered; I still won’t hear it.

¶06. Final Thoughts

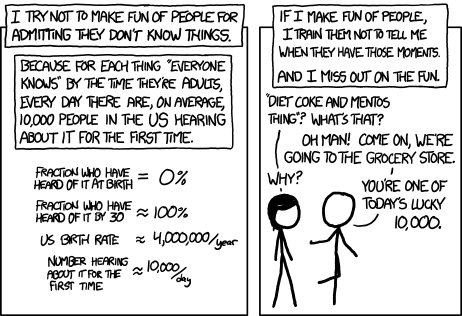

Before starting this semester, I used AI begrudgingly. Now I am beginning to see what is possible with AI, seeing it as more than just a tool to write code I don’t want to write. It’s a force multiplier (I hate using this term, but it feels apt). Instead of atomic, one-off prompts, I’m exploring bigger-picture topics and asking more philosophical questions. Claude is the friend who never treats you like an idiot for asking the dumbest possible question for the seventeenth time in a row. Claude is the one that says, “You’re one of today’s lucky 10,000.”

The ability to hold context is one of the most profound benefits of AI. Information and examples build upon each other, and subsequent prompts do not have to be as exact. I find AI’s ability to infer things remarkable. It is important to vet AI outputs and not to anthropomorphize AI. Privacy, copyright issues, and data mining are still areas that must be discussed and considered in both the building and the use of AI. The ethics, morality, and economics are of even bigger concern. But I cannot deny that using AI over this past semester has affected me. The world and my capacity to do the things I want in it feel larger, as if we’ve all been granted some superpowers. As with anything and anyone in life, discernment is key.

Over the past two years, I’ve spent a considerable amount of time trying to human better. The concepts and skills I have learned around mindfulness and meditation while taking Chris and Tajay’s courses have been my own force multiplier. When it comes to technology, I found that I had become resistant to new software and services, due to the enshittification that has plagued my industry for decades now. Meditating regularly and imperfectly practicing mindfulness has opened me up. I may be flawed, but this doesn’t equate to wrong or bad or evil. Instead, I have found that it generates curiosity, fear, and excitement for the unknown, for things that once would have come up against my own idea of who I am, a challenge I didn’t want to deal with. It was Chris and Tajay’s Compassion, Science, and the Contemplative Arts that truly unlocked the ability for me to be kinder to myself, to no longer believe that I am unlovable.

While I can’t point to an exact scenario in these project sketches where I can say that mindfulness, ethics, or the Buddhist view of flourishing factored into my design, I can state that this fundamental shift in how I feel about myself came about because of mindfulness and the Buddhist view of flourishing. As I have learned throughout these many courses on Buddhism, meditation, compassion, and the science behind it all, the Middle Way is just a good default to have. AI is neither saint nor sinner, neither the doom of humanity nor its savior. In its current iteration, AI is a tool. This is likely to change in the future, and this is where caution, mindfulness, and legal guardrails must prevail over a race to be the first to create truly artificial intelligence. But right now, approaching AI with excited skepticism, I am cautiously optimistic about what is possible.

¶Works Cited

- “Configuring and Using Styles.” Anthropic Help Center, support.anthropic.com/en/articles/10181068-configuring-and-using-styles. Accessed 11 May 2025.

- “Enshittification.” Wikipedia, Wikimedia Foundation, 6 May 2025, en.wikipedia.org/wiki/Enshittification. Accessed 11 May 2025.